Last week, Times Higher Education reported on a paper that was rejected because its two authors are female. Thankfully, this ignited outrage against the journal concerned, PLOS One, which consequently ousted the anonymous reviewer.

The rejected study focuses on gender bias in academia and concludes that such a bias does indeed exist. In this context, the rejection itself proves the point in a stunning way. But besides joining the outrage, I would like to add my own opinion, since gender bias (i.e., patriarchy) is a highly sensitive and complex topic.

As a matter of fact, the majority of scientists are male, and it was not too long ago that women were not accepted as scientists at all. I also find it hard to believe that the paper would have been rejected with the same argument if all of its authors had been male. The reviewer’s phrasing does not seem to call for a gender-balanced author team. Instead, it seems to aim at adding supposedly missing male opinions, which is a big difference. This can only mean that the reviewer assumes male researchers are more objective than female ones, and that a female interpretation is more prone to “ideologically biased assumptions” than a male one.

The other problem is that, from a man’s point of view, being patronized does not seem to be an issue, since we are not the ones being patronized. But this is a misinterpretation too, since we men are also affected by a gender bias, one that expects us to give our work first priority. I think it is rare to see a male scientist take one or two years off to care for his family. And why? Because it would kill our careers, which is exactly what is expected of women.

Last but not least, this event also demonstrates the power of social media in science. The discussion started with a tweet that exposed the biased devaluation and insults many authors have to face in anonymous peer review. However, double-anonymous peer review might not always be the answer: many research areas are so small that it is easy to guess who the author or the reviewer might be.

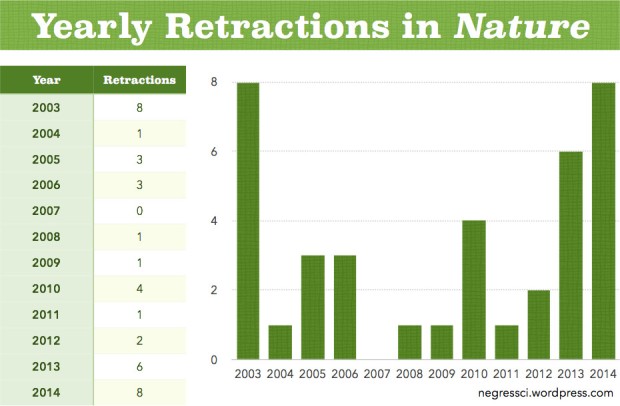

Judging from the impact of this story, it might be worth starting a project similar to the highly regarded Retraction Watch. Maybe something like a “Rejection Watch”, where biased and unfair reviewer comments can be discussed openly.